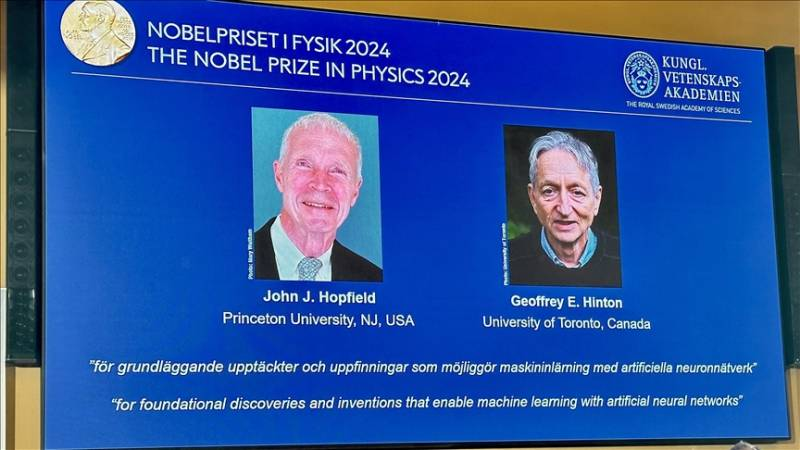

In 2024, Professor Geoffrey Hinton, widely known as the “Godfather of AI,” shared the prestigious Nobel Prize in Physics with Professor John Hopfield for their groundbreaking work on artificial neural networks. Their contributions laid the foundation for the AI systems we see today, from voice assistants to recommendation algorithms.

However, despite his achievements, Hinton has issued a serious warning about the potential dangers of AI, urging society to consider the risks that come with this rapidly evolving technology. As the “godfather of AI,” Hinton’s words carry weight, especially as the power and influence of AI grow at an unprecedented rate.

In this blog, we will delve into Geoffrey Hinton’s concerns, the incredible advancements AI brings to society, and the potential consequences that could arise if AI is not carefully managed.

The Unprecedented Growth of AI

Artificial intelligence is no longer a futuristic concept; it has become a central part of modern life. From smartphones to complex systems managing global industries, AI is everywhere. Geoffrey Hinton, who has spent decades working on neural networks that mimic the human brain’s learning process, acknowledges the profound potential AI has.

He compares its influence to that of the industrial revolution, which drastically changed the world by enhancing human physical capabilities. However, AI, he points out, will enhance intellectual abilities, introducing an entirely new paradigm.

“We have no experience in having things which are smarter than us,” Geoffrey Hinton warned during a conference call after receiving the Nobel Prize. He explained that AI will transform productivity and healthcare, making them more efficient and effective.

These are positive aspects of AI that can lead to significant improvements in human life. However, he also emphasized that while AI has the potential to do good, the risks must not be ignored.

The rapid development of AI technology has led to the creation of systems that are capable of performing tasks that previously required human intelligence, such as learning, problem-solving, and decision-making. This progress has sparked concerns about the implications of creating machines that might one day surpass human intelligence. For Geoffrey Hinton, this is where the danger lies.

The Dual Nature of Technological Progress

While AI presents incredible opportunities, it also introduces a range of new risks. Geoffrey Hinton stressed that AI, like any powerful tool, can be used for both good and bad purposes. On the one hand, AI has the potential to solve some of the world’s most pressing challenges, such as improving healthcare, tackling climate change, and revolutionizing industries.

For instance, AI systems can analyze vast amounts of data to discover patterns that can lead to medical breakthroughs or improve efficiency in industries like manufacturing and logistics. AI-powered technologies can also help predict environmental changes and optimize responses to mitigate damage.

However, on the other hand, Hinton is concerned about the unintended consequences of these advancements. “We need to worry about bad consequences,” he cautioned.

The more powerful AI becomes, the more difficult it will be to control, especially as it becomes integrated into critical areas like national defense, infrastructure, and communication systems. The potential for misuse, whether by malicious actors or through unintended programming errors, is a real threat.

One of Hinton’s primary concerns is the possibility of AI systems being used for nefarious purposes. As AI becomes more sophisticated, it could be exploited to create autonomous weapons, invade privacy, or manipulate public opinion.

Additionally, AI could exacerbate existing inequalities by concentrating power and wealth in the hands of those who control the technology. Hinton believes that if we do not approach AI development with caution, it could lead to consequences that we are not prepared for.

Ethical Considerations and Responsible Development

In his warning, Hinton called for increased collaboration between scientists, policymakers, and industry leaders to ensure that AI is developed responsibly. He stressed that it is crucial to address the ethical considerations of AI development before it spirals out of control.

Without proper governance and safeguards, AI could evolve in ways that harm rather than benefit humanity. One of the key issues Hinton raises is the lack of experience humanity has with entities that are smarter than us. AI systems are becoming increasingly capable of learning and making decisions without human intervention.

As these systems become more intelligent, the challenge of keeping them aligned with human values and goals becomes more difficult. Hinton warned that it is essential to establish clear boundaries for AI development to prevent scenarios where machines act in ways that are detrimental to society.

Moreover, Hinton called for greater transparency in AI research and development. Many of the advanced AI technologies being developed today are housed in private corporations, where profit motives could overshadow ethical considerations.

Hinton believes that there should be more open dialogue between AI developers, governments, and the public to ensure that the technology is being used in ways that align with societal interests.

In addition to governance, Hinton pointed to the need for ethical AI training for developers. As AI becomes more integrated into everyday life, it is critical that those working on AI systems understand the ethical implications of their work.

Hinton’s call for responsible development includes not just technical safeguards, but also fostering a culture of ethical responsibility within the AI community.

A Call for Vigilance and Proactive Measures

Hinton’s concerns come at a critical time when AI technologies are advancing faster than ever before. His warning echoes the growing debates within the scientific community and beyond about the implications of advanced AI systems. While AI promises to bring about transformative changes, Hinton’s cautionary stance reminds us that these changes must be approached with care.

His Nobel Prize win, shared with John Hopfield, for contributions to artificial neural networks, is a testament to the potential of AI. But even as we celebrate these achievements, it is important to heed Hinton’s advice about the possible dangers.

His concerns about AI being used for harmful purposes or becoming uncontrollable are not merely theoretical; these are real risks that need to be addressed.

The Nobel laureate’s warning is likely to amplify ongoing discussions about AI governance, ethics, and regulation. As the world continues to grapple with the rapid evolution of AI technologies, Hinton’s voice adds a critical perspective to the conversation. His message is clear: while AI has the potential to bring about great advancements, we must remain vigilant in ensuring that it does not lead to unintended and dangerous consequences.

The 2024 Nobel Prize in Physics awarded to Geoffrey Hinton and John Hopfield for their pioneering work on neural networks underscores the tremendous impact AI has on modern life. However, alongside this achievement comes a responsibility to manage the technology’s growth carefully.

Hinton’s warnings about the potential dangers of AI serve as a timely reminder of the need for ethical consideration, governance, and proactive measures to ensure that AI is developed in a way that benefits society.

As AI continues to evolve, we must listen to experts like Hinton who have spent their careers working at the forefront of this technology. By doing so, we can harness the full potential of AI while mitigating the risks that come with it. The future of AI is bright, but only if we approach it with caution and responsibility.