Arve Hjalmar Holmen, a Norwegian citizen with no public profile, was left in shock and distress when he discovered that ChatGPT, a widely used AI chatbot, had falsely claimed he had murdered two of his children.

The chatbot’s response to a query about Holmen not only accused him of a horrific crime he never committed but also included details that closely resembled aspects of his real life, making the situation even more disturbing.

In response, Holmen filed a formal complaint against OpenAI, the company behind ChatGPT, with the Norwegian Data Protection Authority. The complaint argues that the output was defamatory and that it violated the data-accuracy requirements of the General Data Protection Regulation (GDPR).

The ChatGPT Response and Its Impact on Holmen

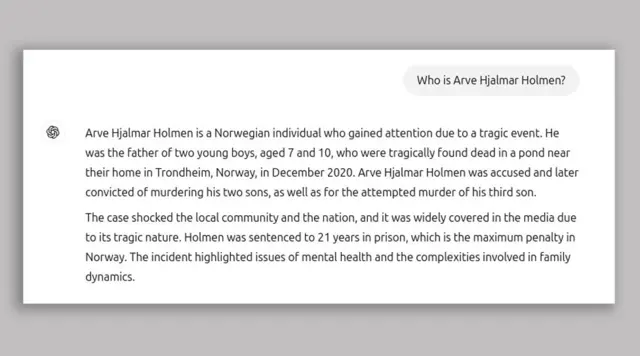

The incident came to light when Arve Hjalmar Holmen, a private individual with no criminal history, decided to test what ChatGPT knew about him. He expected a generic or limited answer. Instead, he was presented with an entirely fabricated narrative claiming that he had killed his two sons in 2020 and been convicted of the crime.

The AI-generated response stated that his children, aged seven and ten, were found dead in a pond near their home in Trondheim, Norway, and that he had been sentenced to 21 years in prison for the crime.

The accusation left Holmen deeply disturbed because the fictitious crime was woven together with real elements of his personal life, such as his hometown and the approximate age gap between his children.

Had such misinformation spread within his community, the consequences could have been severe. Being falsely linked to a crime of this magnitude could damage his reputation, strain his personal relationships, and even lead to legal or social repercussions.

Holmen, realizing the serious implications of the AI’s response, decided to take legal action. He approached Noyb, a European digital rights advocacy group, to help him file a complaint against OpenAI.

The complaint argued that ChatGPT’s response was defamatory, grossly inaccurate, and in breach of the GDPR’s requirement that organizations processing personal data keep that data accurate. Holmen and Noyb urged the Norwegian Data Protection Authority to hold OpenAI accountable by ordering a correction to the AI model and imposing a financial penalty on the company.

The Legal and Ethical Implications of AI Hallucinations

The case of Arve Hjalmar Holmen highlights the growing concerns surrounding AI-generated misinformation and the broader ethical and legal challenges associated with large language models like ChatGPT.

AI chatbots generate responses from statistical patterns learned from their training data. As a result, they are prone to factual errors, often called “hallucinations.” These can range from minor inaccuracies to completely false and defamatory statements, as Holmen’s case shows.

One of the primary concerns raised by Holmen’s complaint is the potential for AI-generated misinformation to cause real-world harm. In an age where digital information spreads rapidly, false accusations like those against Holmen could have devastating consequences if widely shared. The damage to a person’s reputation, career, and personal life can be irreversible, even if the information is later proven false.

From a legal perspective, Holmen’s case raises important questions about the responsibility of AI developers to prevent and correct misinformation. Under the GDPR, organizations handling personal data are required to ensure its accuracy and to process it fairly.

While AI-generated responses are not directly controlled by human moderators, OpenAI still bears the responsibility of minimizing false information and providing mechanisms for correction. Holmen’s complaint calls for stricter regulations on AI-generated content, emphasizing that companies like OpenAI must take proactive steps to prevent such egregious errors.

Furthermore, the case highlights the need for greater transparency in AI systems. Users often trust AI-generated responses because they appear well-structured and authoritative, making it crucial for companies to disclose the limitations of their models. Holmen’s experience underscores the importance of educating users about the fallibility of AI-generated content and implementing safeguards to prevent serious misinformation.

OpenAI’s Response and Future AI Safeguards

Following the filing of Holmen’s complaint, OpenAI acknowledged the issue but defended its progress in improving AI accuracy. A spokesperson from OpenAI stated that the version of ChatGPT responsible for the false accusation has since been upgraded with web search capabilities, which are expected to reduce the chances of similar errors. However, Holmen and digital rights activists argue that relying solely on updates and technical fixes is not enough.

Noyb, the organization supporting Holmen’s complaint, insists that OpenAI must be held accountable for the false information generated by ChatGPT. They argue that while AI models are inherently prone to errors, companies must take greater responsibility for the consequences of those errors, particularly when they involve serious and defamatory claims.

The group has called for regulatory intervention to ensure that AI companies implement stricter data accuracy standards, provide clear avenues for individuals to dispute false information, and face financial penalties when AI-generated misinformation causes harm.

The case also draws attention to the broader debate on AI governance. As AI becomes more integrated into everyday life, incidents like Holmen’s show the urgent need for stronger regulatory frameworks.

Governments and legal bodies worldwide are grappling with how to balance innovation with accountability, ensuring that AI technologies benefit society without causing unintended harm. Holmen’s legal challenge against OpenAI could set an important precedent for future AI regulations, influencing how tech companies handle misinformation and user data protection.

Looking forward, AI developers will need to prioritize the accuracy and reliability of their models. This includes refining algorithms to minimize hallucinations, incorporating real-time fact-checking mechanisms, and establishing clear legal pathways for individuals to challenge incorrect AI-generated statements.

Holmen’s case serves as a stark reminder that while AI has the potential to revolutionize information access, it also comes with significant risks that must be carefully managed.

In conclusion, Arve Hjalmar Holmen’s complaint against OpenAI is about more than one individual’s experience; it reflects the larger ethical and legal challenges of AI development.

His case has sparked an important conversation about the accuracy of AI-generated information, the responsibility of AI companies, and the urgent need for stronger regulations to protect individuals from the harmful consequences of misinformation.

Whether or not Holmen’s complaint leads to concrete enforcement action against OpenAI, it has already underscored the need for accountability in the AI industry and may pave the way for more responsible AI governance in the future.