The case of Brooke Taylor Schinault, a 32-year-old Florida woman who pleaded no contest to making a false report to law enforcement after fabricating a break-in and sexual assault with the aid of artificial intelligence, has drawn broad concern from authorities and the public alike. The incident, which unfolded in St. Petersburg in October, represents a troubling intersection of emerging AI misuse, viral social media behavior, and the serious legal and ethical consequences of inventing violent crimes.

With law enforcement linking the hoax to a growing TikTok trend involving AI-generated images of homeless individuals inside people’s homes, the broader implications extend beyond a single criminal case and reflect new challenges facing police departments around the world. Schinault admitted she was experiencing a difficult period emotionally and fabricated the story while seeking attention, but investigators and prosecutors maintain that the false accusations diverted critical resources and risked undermining genuine victims of violent crime.

The Incident and the AI-Generated Evidence

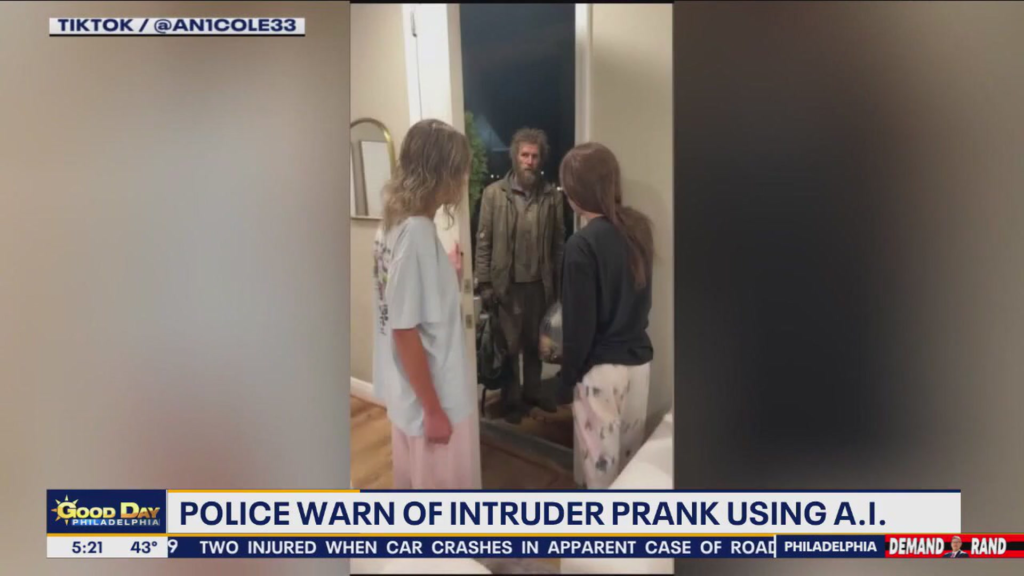

According to St. Petersburg police, Schinault first called 911 on 7 October, reporting that an unidentified man had broken into her home. Officers responded immediately but found no evidence of forced entry or any disturbance consistent with her claim. To support her allegation, Schinault showed police a photograph of a man she described as the intruder. Detectives later confirmed that the image, which depicted a homeless man, was AI-generated.

Shortly after the initial response, Schinault made a second call to police, this time claiming that she had been sexually battered by the same intruder. The escalation prompted officers at the scene to consult a detective, who began reviewing the evidence remotely. The detective quickly recognized that the photograph matched a pattern seen in other recent cases linked to a TikTok trend involving AI-created images of homeless individuals digitally placed inside private homes.

This online phenomenon, dubbed the “AI homeless man” prank, had already surfaced in Texas, Washington, and England, with several cases resulting in false police calls similar to Schinault’s. Investigators searched Schinault’s devices and discovered that the AI-generated image she provided to officers had been created days before she claimed the attack occurred. The file was found in a deleted folder, further supporting the department’s assessment that the entire story had been fabricated.

According to charging documents, police concluded that the image had been produced through ChatGPT’s integrated visual tools, though Schinault denied knowingly participating in any TikTok trends. She later told officers she had lied about the break-in and the alleged sexual assault because she was experiencing depression and seeking attention during a difficult period in her life.

The TikTok Trend and Its Growing Consequences

While Schinault maintains she did not follow any online trends, police and prosecutors have taken the position that her actions mirrored a widely circulating TikTok challenge that uses artificial intelligence to generate photo-realistic images of homeless individuals inside private residences. Social media users typically intend the prank to shock friends or family members into believing that a stranger has entered their home. The images are produced with widely accessible AI tools that allow even novice users to create highly convincing fabricated photographs within seconds.

Law enforcement agencies have issued warnings in multiple jurisdictions as the trend has gained popularity, noting that AI-assisted hoaxes divert essential resources and can significantly delay responses to genuine emergencies. In some documented cases, frightened individuals have contacted police believing the fabricated images to be authentic, leading authorities to conduct full break-in or trespassing investigations before discovering that the images were synthetic.

Officers and advocates for the unhoused have also condemned the trend as dehumanizing, pointing out that using AI-generated portrayals of homeless individuals as props for amusement contributes to harmful stereotypes and exploits an already vulnerable population. The growing realism of AI photography complicates these concerns further, as law enforcement and the general public must now contend with a new layer of digital misinformation.

In Brooke Taylor Schinault’s case, the combination of a fabricated violent crime and an AI-generated suspect image raised the severity of the situation. False reports of sexual assault are treated with particular gravity because they can erode public trust, discourage actual victims from coming forward, and potentially lead to wrongful suspicion of innocent people. The broader context of the TikTok trend reinforced the sense that technology-driven misinformation can easily spill into real-world criminal investigations.

Legal Outcome and Implications for Law Enforcement

Following her admission that no break-in or sexual assault had occurred, Schinault entered a no-contest plea to a charge of making a false report to law enforcement. She was placed on probation and ordered to pay a fine. Because the charge relates to knowingly providing fabricated information to police, the penalty reflects the legal system’s attempt to balance accountability with the acknowledgment that she had been struggling with mental health issues at the time. A phone number associated with Schinault in police documents has since been disconnected, and efforts to reach her public defender for comment have not been successful.

The case illustrates the increasing complexity facing law enforcement as artificial intelligence becomes more advanced and more widely available. Detectives in St. Petersburg indicated that identifying the AI-generated photograph quickly helped them avoid spending additional hours on a false lead, but they also acknowledged that the trend poses new challenges for investigators. As more individuals gain access to tools capable of creating near-perfect synthetic images or audio, police departments must adapt their investigative techniques to detect falsified digital evidence in real time.

Beyond the technical aspects, the case raises deeper societal questions about how AI may shape public behavior, emergency response, and even criminal intent. If individuals can fabricate visual or audio evidence convincingly enough to deceive even experienced officers, the potential for harm extends far beyond isolated incidents. Misinformation that once required sophisticated tools or significant planning can now be produced in minutes, increasing the risk of false accusations, wasted emergency resources, and public confusion during critical situations.

Authorities have emphasized that deliberately filing false reports, whether or not artificial intelligence is involved, remains a criminal offense. The use of AI merely amplifies the seriousness of the act when fabricated images or audio are used to strengthen a false claim. Police departments in multiple countries have urged social media users not to engage with these trends, highlighting past cases in which first responders were dispatched unnecessarily, tying up personnel who could have been responding to legitimate emergencies.

While Schinault’s case did not lead to any arrests of innocent individuals, its outcome underscores how easily misinformation can cross the threshold from online entertainment to real-world criminal investigation. It also serves as a reminder that the legal framework governing false reports remains firmly in place regardless of technological advances. For law enforcement agencies grappling with the expanding influence of AI, this incident reinforces the importance of training officers to recognize and analyze digitally generated evidence.

As artificial intelligence continues to evolve, incidents like this one offer a preview of the broader challenges ahead. The ability to fabricate convincing images has already reshaped online culture, but as Brooke Taylor Schinault’s case demonstrates, the consequences can extend far beyond social media. Moving forward, the intersection of AI, law enforcement, mental health, and public behavior will likely demand new strategies from authorities and greater awareness from the public about the real-world implications of digital misinformation.
