Jerome Dewald, a 74-year-old entrepreneur representing himself in a legal dispute, found himself at the center of controversy when he used an AI-generated avatar to argue his appeal in a New York court.

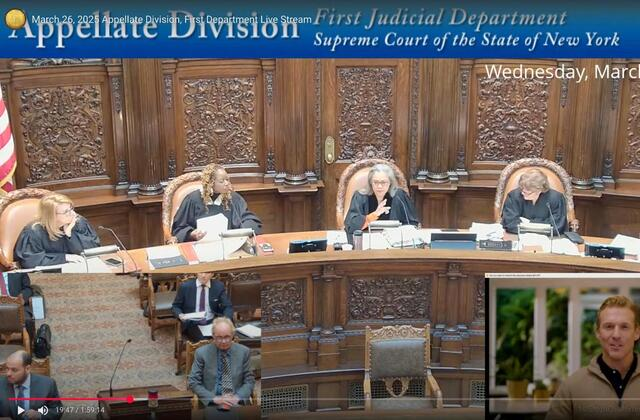

The incident, which unfolded on March 26 during a hearing in front of the Appellate Division’s First Judicial Department, highlighted both the growing presence of artificial intelligence in the legal field and the potential pitfalls of its use in formal judicial settings.

Dewald intended the AI-generated video to present his argument more effectively, but the undisclosed digital persona backfired. It earned him a harsh rebuke from the presiding judge and drew widespread attention to whether the legal system is ready for AI.

A Virtual Appearance That Shocked the Courtroom

Dewald had been in a legal dispute with a former employer and was appealing a lower court’s decision. Representing himself, he had obtained the court’s permission to present his argument through a prerecorded video, hoping it would convey his points more clearly than speaking in person.

When the video began to play, what appeared on the screen was not Dewald himself but a much younger-looking man in a blue shirt and beige sweater, standing against a digitally blurred background. The virtual figure spoke confidently, presenting the legal arguments on Dewald’s behalf.

This unexpected appearance caused immediate confusion in the courtroom. One of the judges, Justice Sallie Manzanet-Daniels, asked Dewald who the speaker in the video was. When Dewald explained that the figure was not a real person but an AI-generated avatar he had created, the judge’s tone quickly shifted from confusion to frustration.

“It would have been nice to know that when you made your application,” she said pointedly, before ordering the video presentation to be shut down.


The reaction was not just about the surprise element — it touched on deeper concerns around transparency and courtroom decorum. The judge added, “I don’t appreciate being misled,” making it clear that Jerome Dewald’s failure to disclose the nature of the video had seriously undermined his credibility.

Though the court had allowed him to submit a video, the implicit understanding was that it would be an authentic representation of Dewald himself, not a synthetic creation.

Missteps, Intentions, and a Sincere Apology

Following the hearing, a deeply embarrassed Dewald sent a written apology to the judges, admitting that his actions had “inadvertently misled” the court. He explained that his original plan had been to create a digital version of himself, but technical difficulties forced him to use a generic, AI-generated persona instead.

His intent, he clarified, was not to deceive but to deliver his arguments in a clearer, less nerve-wracking way. Past experiences in court had seen him falter under pressure, stumbling over words and losing his train of thought. The AI video, he believed, would help him overcome these challenges.


In his letter, Jerome Dewald acknowledged that he should have informed the court about the use of AI beforehand and accepted full responsibility for not being transparent. He emphasized that he had no intention of undermining the court’s integrity.

“My intent was never to deceive but rather to present my arguments in the most efficient manner possible,” he wrote. “However, I recognize that proper disclosure and transparency must always take precedence.”

After the video was stopped, Dewald presented his argument live. Visibly nervous, he stammered through his statements and frequently paused to consult notes on his cellphone. He made an evident effort to return to a traditional presentation, but the incident had already left a lasting impression on the court and those observing.

The Broader Implications of AI in the Legal System

While Jerome Dewald’s case may seem like an isolated instance, it reflects a larger, rapidly evolving issue — the intersection of artificial intelligence and the legal system. The idea of using AI tools in legal proceedings is not new, and there have already been several high-profile cases of lawyers and litigants facing backlash for relying on AI-generated content.

In 2023, a New York attorney was sanctioned after submitting a legal brief containing fabricated citations created by ChatGPT. That same year, Michael Cohen, President Trump’s former personal lawyer, acknowledged that case citations he had passed to his attorney, which were then included in a court filing, had been invented by Google Bard.

These incidents highlight the promise and peril of integrating AI into the legal process. On one hand, AI can provide valuable assistance to those who cannot afford legal representation, offering quick access to legal research, document drafting, and more. On the other hand, the risk of inaccuracy and the temptation to use AI in ways that mislead or circumvent court protocols present serious ethical and procedural challenges.

Experts warn that generative AI tools, particularly large language models, can still “hallucinate,” producing output that looks convincing but is factually incorrect or legally irrelevant.

“That risk has to be addressed,” said Daniel Shin, assistant director of research at the Center for Legal and Court Technology at William & Mary Law School. Shin noted that while AI can be a useful supplement, it cannot be a substitute for real legal expertise, human discretion, and proper courtroom procedure.

For courts, this means adapting to the new technological landscape with clear guidelines and policies. Should AI-generated materials be allowed in legal proceedings? If so, what kind of disclosures must accompany them? What penalties should apply to those who fail to inform the court about their use of such technologies?

As of now, there is no universal framework for addressing these questions. Courts operate on the principles of fairness, transparency, and authenticity — principles that can be jeopardized when synthetic content is introduced without proper context or explanation. Jerome Dewald’s misstep, however well-intentioned, serves as a cautionary tale for the broader legal community.

A Lesson for the Future

Jerome Dewald’s decision to employ an AI avatar during his legal appeal in a New York courtroom has triggered a wider discussion on the ethical boundaries and procedural rules surrounding artificial intelligence in the justice system.

While Dewald did not intend to mislead, his case illustrates the importance of full disclosure, the risks of relying on emerging technology without understanding its limitations, and the legal system’s growing discomfort with non-traditional methods of argumentation.

As AI continues to evolve and become more accessible, both litigants and courts must tread carefully. The technology holds great potential, especially for self-represented individuals like Jerome Dewald who seek support in navigating complex legal environments. But transparency, trust, and integrity remain non-negotiable values in any courtroom.

Moving forward, courts may need to issue clearer guidance on what constitutes acceptable use of AI and how to properly disclose such use. For individuals hoping to use AI to assist in their legal matters, the message is clear: use with caution, disclose with clarity, and never let technology obscure the fundamental principles of justice.

In the end, Dewald’s experience may prove to be more than a personal embarrassment. It could become a critical milestone in the conversation about how AI should, and should not, be integrated into one of the most human institutions of all: the court of law.